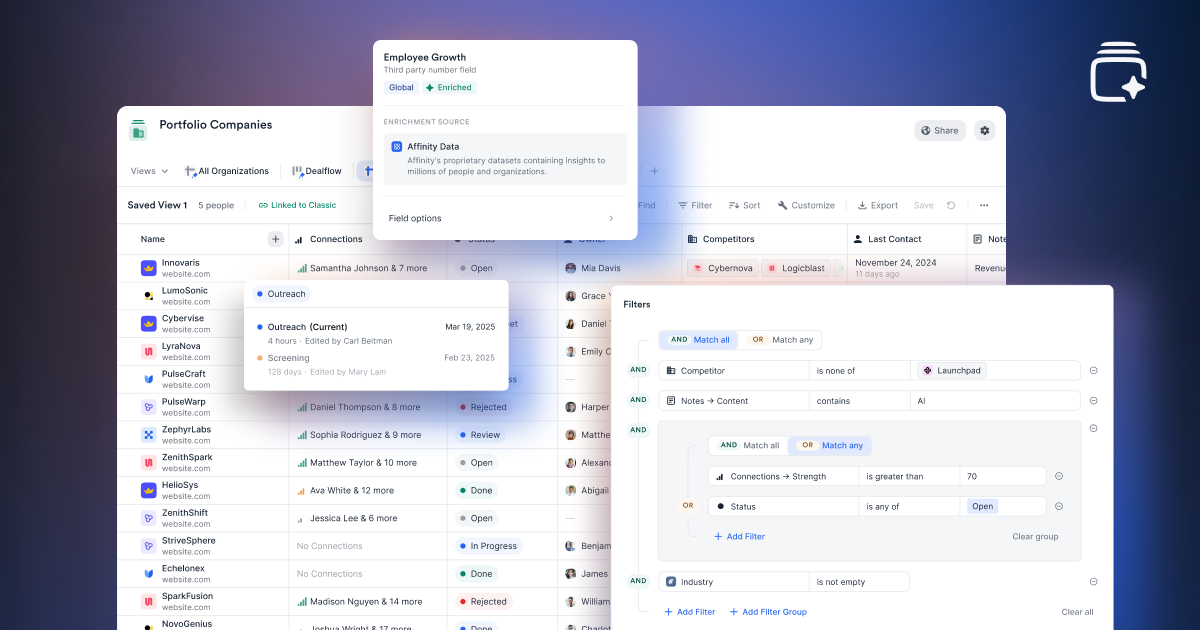

Missing firmographics, stale metrics, and manual CSV uploads bog teams down before diligence even starts. With Affinity’s API v2 you can stream trusted data—whether it lives in Snowflake, Preqin, or a proprietary feed—straight into the Affinity list fields your deal team already filters and reports on. Scheduled syncs keep every list entry—and any global profile fields you update—fresh, so views and dashboards always display the latest numbers.

Why custom data enrichment matters

When you can pull data from any source directly into Affinity, you layer in the exact signals your deal team relies on. That means they can spend less time hunting for headcount or ARR numbers and more time evaluating deals, while any dashboards or LP reports that reference the same list automatically pick up the fresh data on their next refresh.

“Having a single source of truth is super important, and a single source of truth that's extendable is doubly important. The way that Affinity has architected data, especially with API v2, has made things incredibly straightforward. Integrating Affinity is now a requirement for new technologies we consider.” — Abhishek Lahoti, Head of Platform at Highland Europe

Popular use cases

Data-warehouse sync. Many firms store portfolio metrics, such as ARR or burn rate, in data warehouses like Snowflake. A nightly job fetches those figures and patches them to the right list fields via the update-fields API so your team opens Affinity to fresh data—no CSV uploads required.

There are three primary ways to move data from a data warehouse into Affinity:

- Map with a reverse-ETL tool (Hightouch, Census): Point-and-click mapping pushes Snowflake columns to Affinity field IDs on a schedule

- Trigger a serverless function (AWS Lambda, Cloud Functions): Drop your nightly export in S3 or GCS, and let the function call the update-fields API automatically

- Run a scheduled script (Python, Node): Query the warehouse, then batch up to 100 field updates per request; schedule it with cron, GitHub Actions, or Cloud Scheduler for hands-off runs
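For the scheduled-script route, the batching step might look like the bash sketch below. It assumes each update is already a JSON object for a single list entry; the function name batch_update_bodies and its one-body-per-line output are illustrative choices, not part of Affinity's API.

```bash
#!/usr/bin/env bash
# Sketch: split one list entry's field updates into update-fields request
# bodies of at most 100 updates each (the per-call limit described above).
set -euo pipefail

MAX_UPDATES_PER_CALL=100

# Print one request body per line. Each argument is one update object that is
# already valid JSON, e.g. {"id":"field-123","value":{"type":"number","data":1}}
batch_update_bodies() {
  local -a updates=("$@")
  local total=${#updates[@]} start i joined
  for (( start = 0; start < total; start += MAX_UPDATES_PER_CALL )); do
    joined=""
    for (( i = start; i < start + MAX_UPDATES_PER_CALL && i < total; i++ )); do
      joined+="${joined:+,}${updates[i]}"   # comma-separate after the first item
    done
    printf '{"operation":"update-fields","updates":[%s]}\n' "$joined"
  done
}
```

Each printed line is a complete body for one PATCH call to the list entry's fields endpoint described in step 4.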

Post-investment monitoring. Once a deal closes, you can pipe ESG scores or other health indicators straight into your portfolio company list from your internal data warehouse or a platform like Vestberry. With this data in Affinity, you can spin up a “Health Monitor” dashboard view that filters for companies falling below key thresholds, so your team can spot at-risk companies early.

Specialist enrichment. Some sourcing teams rely on highly specific signals like Preqin’s ownership data or niche market-share rankings. Map those attributes to custom fields and keep them current via the update-fields API endpoint, turning Affinity into a unified hub where relationship history sits alongside the specialty intel that drives decisions.
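Mapping provider attributes to custom fields can be as simple as a lookup table. In this bash sketch (bash 4+ for associative arrays), the attribute names, the field IDs, and the tab-separated input format are all hypothetical; substitute the IDs your own list returns.

```bash
#!/usr/bin/env bash
# Sketch: translate provider attribute names into Affinity field IDs.
# Attribute names and field IDs below are HYPOTHETICAL placeholders; use the
# IDs returned by GET /v2/lists/{listId}/fields for your own list.
set -euo pipefail

declare -A FIELD_FOR_ATTRIBUTE=(
  [ownership_pct]="field-90001"      # hypothetical
  [market_share_rank]="field-90002"  # hypothetical
)

# Read "attribute<TAB>value" lines on stdin and print "fieldId<TAB>value" for
# every attribute that has a mapping; unmapped attributes are reported on stderr.
map_attributes() {
  local attribute value
  while IFS=$'\t' read -r attribute value; do
    [[ -n $attribute ]] || continue   # empty keys are invalid array subscripts
    if [[ -n ${FIELD_FOR_ATTRIBUTE[$attribute]:-} ]]; then
      printf '%s\t%s\n' "${FIELD_FOR_ATTRIBUTE[$attribute]}" "$value"
    else
      printf 'skipping unmapped attribute: %s\n' "$attribute" >&2
    fi
  done
}
```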


What you need to get started with Affinity’s API v2

- An Affinity plan with API v2 access

- A user with "Generate an API key" and "Export data from Lists" permissions

- Your personal API key (Bearer token)

- Credentials for your enrichment provider or warehouse

- The List ID, listEntryIds, and Field IDs you plan to update

- An optional middleware tool to manage syncs without writing code

How it works in four quick steps

1. Grab your API credentials

In Settings → API, click Generate an API key. Pass the key as a Bearer token on every request, and store it somewhere secure: the key is shown only once.
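One way to keep the key out of your scripts is to read it from an environment variable at run time (for example, populated from a secrets manager). The variable name AFFINITY_API_KEY and the helper below are illustrative assumptions, not something Affinity requires.

```bash
#!/usr/bin/env bash
# Sketch: read the API key from the environment instead of hard-coding it.
# AFFINITY_API_KEY is a variable name chosen for this example.
set -euo pipefail

# Print the Authorization header line, or fail if no key is configured.
affinity_auth_header() {
  if [[ -z ${AFFINITY_API_KEY:-} ]]; then
    printf 'AFFINITY_API_KEY is not set\n' >&2
    return 1
  fi
  printf 'Authorization: Bearer %s\n' "$AFFINITY_API_KEY"
}

# Example:
#   auth=$(affinity_auth_header)   # under set -e, the script stops here if the key is missing
#   curl -H "$auth" "https://api.affinity.co/v2/lists/${LIST_ID}/fields"
```

Assigning the header to a variable first matters: a failing command substitution inside curl's argument list would not stop the script, but a failing assignment does under set -e.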

2. Identify your target list and fields

Copy the List ID from the list’s URL, then call GET /v2/lists/{listId}/fields to retrieve each field’s id, name, and type.
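Because the response is paginated, a script that reads every field should keep following pagination.nextUrl until it is null. This bash sketch assumes nextUrl is an absolute URL you can request as-is, and it extracts that value with a bash regex to avoid extra dependencies; a JSON tool such as jq is the sturdier choice in production. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print every page of GET /v2/lists/{listId}/fields.
# ASSUMPTION: pagination.nextUrl is an absolute URL, or null on the last page.
set -euo pipefail

AFFINITY_BASE_URL=${AFFINITY_BASE_URL:-https://api.affinity.co}

# One authenticated GET; prints the response body and fails on HTTP errors.
affinity_get() {
  curl --silent --show-error --fail \
    -H "Authorization: Bearer ${AFFINITY_API_KEY:?AFFINITY_API_KEY is not set}" \
    "$1"
}

# Print pagination.nextUrl from a response body, or nothing when it is null.
# A regex keeps this dependency-free; a real JSON parser such as jq is sturdier.
next_url() {
  local re='"nextUrl"[[:space:]]*:[[:space:]]*"([^"]+)"'
  if [[ $1 =~ $re ]]; then
    printf '%s\n' "${BASH_REMATCH[1]}"
  fi
}

# Print each page's JSON body in turn, following nextUrl until it runs out.
list_fields() {
  local url="${AFFINITY_BASE_URL}/v2/lists/$1/fields" body
  while [[ -n $url ]]; do
    body=$(affinity_get "$url")
    printf '%s\n' "$body"
    url=$(next_url "$body")
  done
}
```

With jq installed, list_fields 12345 | jq -r '.data[] | [.id, .name, .valueType] | @tsv' would print one tab-separated row per field (12345 stands in for your list ID).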

Here is an example response from this endpoint for a list containing a custom field called Revenue. Note the field's id (here, field-5134); you'll use it in later steps.

```json
{
  "data": [
    {
      "id": "field-5134",
      "type": "list",
      "enrichmentSource": null,
      "name": "Revenue",
      "valueType": "number"
    },
    ... list of fields continues ...
  ],
  "pagination": {
    "prevUrl": null,
    "nextUrl": null
  }
}
```

3. Fetch fresh data from your provider

Query your warehouse or enrichment API, then map each new value to a {listEntryId, fieldId} pair.

If your warehouse already stores Affinity’s company IDs, use those to match records directly. Otherwise, you’ll need to look up each company using a shared identifier—domain is usually the most reliable option.
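Matching on domain works best when both sides are normalized the same way. This bash 4+ sketch lowercases a domain and strips the scheme, a leading "www.", and any path, then looks up the list entry ID in an index built from a two-column domain,listEntryId file. That file format and the helper names are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Sketch: match warehouse rows to Affinity list entries by domain.
# The "domain,listEntryId" input is a HYPOTHETICAL export you prepare yourself.
# Requires bash 4+ (associative arrays and ${var,,} lowercasing).
set -euo pipefail

declare -A ENTRY_BY_DOMAIN=()

# Lowercase a domain or URL and strip the scheme, a leading "www.", and any path.
normalize_domain() {
  local d=${1,,}
  d=${d#*://}
  d=${d#www.}
  d=${d%%/*}
  printf '%s\n' "$d"
}

# Read "domain,listEntryId" lines on stdin into ENTRY_BY_DOMAIN.
build_entry_index() {
  local domain entry_id key
  while IFS=, read -r domain entry_id; do
    [[ -n $domain && -n $entry_id ]] || continue
    key=$(normalize_domain "$domain")
    [[ -n $key ]] || continue
    ENTRY_BY_DOMAIN[$key]=$entry_id
  done
}

# Print the list entry ID for a domain; return 1 if there is no match.
lookup_entry() {
  local key
  key=$(normalize_domain "$1")
  [[ -n $key && -n ${ENTRY_BY_DOMAIN[$key]:-} ]] || return 1
  printf '%s\n' "${ENTRY_BY_DOMAIN[$key]}"
}
```

Rows that return no match are good candidates for a manual review queue rather than a guess.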

4. Write updates back to Affinity

Send a PATCH request to /v2/lists/{listId}/list-entries/{listEntryId}/fields with "operation": "update-fields". Each call can include up to 100 field updates for that list entry.

Quick demo (bash):

```bash
#!/bin/bash
# Replace the placeholders below with real values.
AFFINITY_API_KEY="your-api-key"
LIST_ID="your-list-id"         # ← Step 2
ENTRY_ID="your-list-entry-id"  # ← Step 3

# The field IDs in the request body come from Step 2.
curl -X PATCH \
  -H "Authorization: Bearer $AFFINITY_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "operation": "update-fields",
    "updates": [
      {"id": "field-36590", "value": {"type": "number", "data": 1250}},
      {"id": "field-35901", "value": {"type": "text", "data": "Series B"}}
    ]
  }' \
  "https://api.affinity.co/v2/lists/${LIST_ID}/list-entries/${ENTRY_ID}/fields"
```

Rule of thumb: update a global field if you want the new value to appear on every profile and list; use a list-specific field when the data matters only inside one view.
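The demo hard-codes its JSON body. When values come from a warehouse, a small builder helps keep quoting and escaping correct. This bash sketch handles only number and text values (other value types have their own shapes in the API docs), and its function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: build an update-fields request body from values instead of
# hand-writing JSON. Only "number" and "text" value types are handled here.
set -euo pipefail

# Escape a string for use inside a JSON string literal
# (backslashes first, then quotes and common control characters).
json_escape() {
  local s=$1
  s=${s//\\/\\\\}
  s=${s//\"/\\\"}
  s=${s//$'\n'/\\n}
  s=${s//$'\r'/\\r}
  s=${s//$'\t'/\\t}
  printf '%s' "$s"
}

# Print one update object. Numbers are validated and emitted bare;
# text is escaped and emitted as a JSON string.
field_update() {
  local field_id=$1 type=$2 data=$3
  local number_re='^-?[0-9]+(\.[0-9]+)?$'
  case $type in
    number)
      [[ $data =~ $number_re ]] || { printf 'not a number: %s\n' "$data" >&2; return 1; }
      printf '{"id":"%s","value":{"type":"number","data":%s}}' "$field_id" "$data" ;;
    text)
      printf '{"id":"%s","value":{"type":"text","data":"%s"}}' "$field_id" "$(json_escape "$data")" ;;
    *)
      printf 'unsupported type in this sketch: %s\n' "$type" >&2
      return 1 ;;
  esac
}

# Join update objects (one per argument) into a single request body.
update_fields_body() {
  local IFS=,
  printf '{"operation":"update-fields","updates":[%s]}\n' "$*"
}
```

For example, update_fields_body "$(field_update field-36590 number 1250)" "$(field_update field-35901 text 'Series B')" reproduces the body used in the demo, minus the whitespace.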

For large backfills, keep sending batched updates until you receive a 429 “Too Many Requests” response; then pause for as long as the returned header indicates before retrying.
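A bash sketch of that pause-and-retry loop follows. It assumes the wait is sent in seconds in a header named by WAIT_HEADER (defaulting to Retry-After); confirm the exact header name in Affinity's rate-limit documentation before relying on it. The 60-second fallback and 5-attempt cap are arbitrary choices, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: retry an update-fields PATCH on HTTP 429, waiting as a header says.
# ASSUMPTION: the wait is given in seconds in the header named by WAIT_HEADER
# (default "Retry-After"); check Affinity's docs for the real header name.
set -euo pipefail

WAIT_HEADER=${WAIT_HEADER:-Retry-After}

# Print the wait in seconds from a curl header dump, or the fallback ($2).
wait_seconds() {
  local header_file=$1 fallback=${2:-60} name value
  while IFS=: read -r name value; do
    if [[ ${name,,} == "${WAIT_HEADER,,}" ]]; then
      value=${value//[[:space:]]/}          # drop spaces and the trailing CR
      if [[ $value =~ ^[0-9]+$ ]]; then
        printf '%s\n' "$value"
        return 0
      fi
    fi
  done < "$header_file"
  printf '%s\n' "$fallback"
}

# Send one PATCH; on 429, sleep and retry up to max_attempts times.
patch_with_retry() {
  local url=$1 body=$2 max_attempts=${3:-5} attempt status hdr
  hdr=$(mktemp)
  for (( attempt = 1; attempt <= max_attempts; attempt++ )); do
    status=$(curl --silent --show-error -o /dev/null -D "$hdr" \
      -w '%{http_code}' -X PATCH \
      -H "Authorization: Bearer ${AFFINITY_API_KEY:?AFFINITY_API_KEY is not set}" \
      -H "Content-Type: application/json" \
      -d "$body" "$url") || status=000   # network errors count as failure
    if [[ $status != 429 ]]; then
      rm -f "$hdr"
      [[ $status == 2?? ]] && return 0
      printf 'request failed with HTTP %s\n' "$status" >&2
      return 1
    fi
    sleep "$(wait_seconds "$hdr")"
  done
  rm -f "$hdr"
  printf 'still rate-limited after %s attempts\n' "$max_attempts" >&2
  return 1
}
```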

Ready to build?

Start with a small test list, confirm your writes, then schedule the job to run nightly or on every new deal you evaluate. Explore the full API v2 docs for additional examples and tips.

We’re continuing to expand what’s possible with API v2 so your team can move faster, automate more, and integrate with the tools you rely on. Explore how the API is evolving and join our API newsletter to stay up-to-date on the latest changes to our integrations and APIs.
